My first couple of months at my new job with Quick Solutions have been wonderful. I work with a bunch of great folks, I’m doing Agile development in a great environment, and I am heads down on project work somewhere between 65% and 80% of my time, which is just fantastic. Time formerly spent on the phone trying to swing deals with unlikely prospects has vanished, and the only SOWs I’m involved with writing now are those which have a solid chance of being signed by the customer. I’m still commuting a long time (2.5 to 3 hours per day), but I’m ridesharing with a great co-worker who’s here on an H-1B visa from India, so I get a lot of cool discussion time on wide-ranging topics. (Plus he likes Dot Net Rocks so we’re spending lots of time listening to good podcasts.)

In short, life is good.

That said, I had a hugely ironic deja vu moment when I first started on my current project: it’s amazingly similar to a technical manual viewer which I worked on years ago. That project cratered due to all the classic symptoms of a failed IT project: poor development practices (I left a company because of them), weak project management, and little involvement with the true end users. I was far too emotionally involved with that project because it was targeted at helping active duty Air Force folks work with the scads of tech manuals required in their daily work. I’d been one of those guys for 11 years, and I wanted that viewer to get deployed come hell or high water because I knew how badly the paper tech manuals sucked. Both hell and high water came and the system got put on the shelf without ever being fielded. That sucked.

Obviously it’s something I’m still a little obsessed about, but I’m working on that.

Fast forward six or so years. I start with QSI, spend a couple days sitting in on a terrific SharePoint 2007 Architectural Design Session with a smart guy from Microsoft, then head back to QSI’s dev center to find out what I’ll be working on. I sit down with Jeff, the project engineer, to find out details on the project. Deja vu: it’s another technical data viewer. Since I had previous experience with a system displaying technical manual content, I took a feature for displaying HTML content in a WinForms WebBrowser.

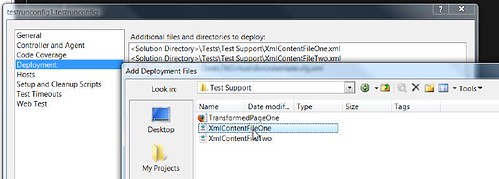

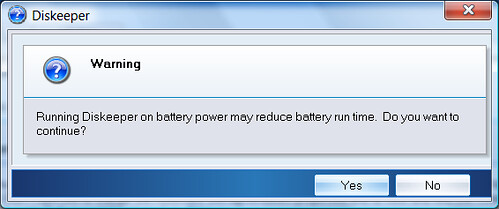

To make a long story short, my feature turned into a long slog through the poorly documented and very twitchy WebBrowser control, the powerful but somewhat mystical XslCompiledTransform class, and a crapload of work centered around getting poorly authored HTML documents converted into DocBook 5.x format. The work was very frustrating for me because there wasn’t a whole lot of coding involved, just days and weeks spent slogging through standards, documentation, and blog posts. Furthermore, when I could get some coding done it was usually centered around trying to nail down the WebBrowser’s flat-assed WEIRD eventing behavior, particularly when loading content into its Document properties or trying to deal with the infamous “about:blank” URI. Test-driven development? Not particularly workable in that context. Ergo, no green lights on the test runner, which is always frustrating for me.

Last week was finally a huge turning point for me. Everything began to fall into place and I’ve made some great progress. I’m getting tests written (first, even!), I’ve got content migrated into DocBook, and I’m storing it in a database and transforming it en route from the DB out to the browser control. I got a history list working for backward/forward navigation (not available in the WebBrowser control when you’re streaming in content), and I even fired up the Enterprise Library’s Crypto classes to use some DPAPI encryption/decryption to protect the content in the database.
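For anyone curious what a hand-rolled history list looks like, here is a minimal, language-neutral sketch of the general idea. It is written in Python for brevity and is not the project's actual code; the class name `NavigationHistory`, its methods, and the use of plain string document IDs are all assumptions made for illustration. The only behavior it tries to capture is the usual browser rule: visiting a new document throws away any "forward" entries.

```python
class NavigationHistory:
    """Hypothetical back/forward history for content streamed into a viewer."""

    def __init__(self):
        self._entries = []   # document IDs in the order they were visited
        self._index = -1     # position of the current document, -1 when empty

    @property
    def current(self):
        return self._entries[self._index] if self._index >= 0 else None

    @property
    def can_go_back(self):
        return self._index > 0

    @property
    def can_go_forward(self):
        return self._index < len(self._entries) - 1

    def visit(self, doc_id):
        # A fresh visit discards everything "ahead" of the current position.
        del self._entries[self._index + 1:]
        self._entries.append(doc_id)
        self._index += 1

    def back(self):
        # Returns the document ID to reload, or None at the start of history.
        if not self.can_go_back:
            return None
        self._index -= 1
        return self._entries[self._index]

    def forward(self):
        # Returns the document ID to reload, or None at the end of history.
        if not self.can_go_forward:
            return None
        self._index += 1
        return self._entries[self._index]
```

In a viewer, the caller would take the ID returned by `back()` or `forward()`, pull that document from storage, transform it, and stream it into the control without calling `visit()` again, so that plain navigation does not add new history entries.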

It’s nice to see some dots going on my short list of features on the project wall. Folks on my team have been great about not giving me any serious guff on my lack of progress because they’ve realized how sticky a wicket the feature was. (There’s been some good-natured ribbing on my lack of feature sign-off, but it’s usually been started by me poking fun at myself.)

One tangential note: the feature was badly underestimated and missed the boat with its initial planned complexity, but we were also able to do a serious Agile redirection en route and make a significant change in the entire feature design to support a huge change in the customer’s needs. Initially one of the customer team members had mandated the need to keep content in HTML. We spent a couple of weeks going down that road while pushing them to look hard at moving to XML and DocBook. The customer finally agreed, and we were able to get them to a much better place for their current needs and a hugely better place for any future content work they’ll do. Yay for Agile work where everyone’s friendly to requirements change!

I’ll post up some details on the hard knocks I’ve gone through with the WebBrowser, and perhaps write up a bit on how friggin’ easy it is to use EntLib for DPAPI work. You’ve already seen some on my XslCompiledTransform work and I’ll have some more content in that area soon too.

All in all, I’m very, very happy with where I’m at now, particularly since I’m getting some features on the wall with dots on them. (The original feature is still undotted, but it’s getting close to sign off!)